Ijraset Journal For Research in Applied Science and Engineering Technology

Musical Notes Classification and Recommendation Using Machine Learning

Authors: Shrikant Devidas Kharat, Sujay Sanjay Shekokar, Prajwal Sudhir Waghmare, Sarthak K. Hupare, Prof. Jayshree S. Mahajan

DOI Link: https://doi.org/10.22214/ijraset.2024.63390

Abstract

This paper explores the fundamental concept of learning algorithms from data in machine learning and introduces its two key paradigms: supervised and unsupervised learning. It analyses supervised learning in depth, showing how data classification is accomplished through algorithms such as support vector machines (SVM), linear regression, logistic regression, neural networks, and nearest neighbour. Particular emphasis is placed on SVM's capacity to establish non-linear decision boundaries using kernel functions, which makes it relevant to practical problems such as face detection and handwriting recognition. The research outcomes underscore two significant points: the applicability of the Pitch Class Profile (PCP) for describing chords in a machine-learning context, and the algorithm's proficiency in recognizing chords played on diverse instruments, including those unseen during the training phase. This highlights the versatility of machine learning methodologies in chord recognition and their potential for real-world applications. The study also contributes to pattern analysis and classification within the broader domain of machine learning. The PCP feature vector, pivotal in the study, offers a robust depiction of chord characteristics, facilitating precise recognition across varied instruments and environments. Additionally, the development of a specialized chord database tailored to Western European music enhances the study's practical utility and provides a valuable resource for future research in music recognition systems. In essence, the paper shows the significance of data-driven algorithms in machine learning and the efficacy of supervised learning techniques, particularly SVM, in tackling complex tasks such as classification.
Furthermore, the findings demonstrate the adaptable nature of machine learning approaches in music recognition and their potential applicability in real-world contexts. Overall, the paper contributes to a deeper understanding of machine learning principles and their practical implementation.

I. INTRODUCTION

The field of Music Information Retrieval (MIR) [13] has advanced notably in recent years, largely due to the influence of machine learning techniques across various applications. Guitar chord and tablature recognition has become a prominent area within MIR, focusing on automating the transcription of guitar music from sources such as audio recordings or scanned/tabulated materials. The primary goal of this survey paper is to offer a comprehensive overview of existing research in guitar chord recognition using machine learning techniques.

In this study, we introduce the fundamental concept of learning algorithms from data in machine learning. We outline the two primary paradigms of machine learning, supervised and unsupervised learning, with a specific focus on supervised learning using algorithms such as support vector machines (SVM). We also trace the evolution from linear classification to SVM, which generates non-linear decision boundaries using kernel functions and is applicable in real-world scenarios such as face detection and handwriting recognition. The motivation behind the study is to explore how machine learning algorithms, particularly support vector machines, can be applied effectively in practical contexts such as chord recognition in music. Using the Pitch Class Profile (PCP) feature vector, we aim to show that machine learning methods can recognize complex patterns even with limited attribute information, and to improve chord recognition across different musical contexts. The project scope entails implementing machine learning methodologies, particularly the feed-forward neural network, for chord recognition using the PCP feature vector. Additionally, we aim to design a comprehensive chord database tailored to Western European music to facilitate chord recognition across diverse instrument samples.

However, limitations may arise in terms of dataset size and diversity, potential computational constraints, and variations in chord structures across different musical genres, impacting the generalizability and scalability of the proposed machine-learning approach. The methodology involves employing a machine learning approach, specifically a feed-forward neural network, to address the chord recognition challenge in music. By leveraging the PCP feature vector, our methodology aims to accurately classify and recognize chords based on limited attribute information, with the potential utilization of support vector machines (SVM) and other classification algorithms to enhance the chord recognition process and showcase the robustness and adaptability of machine learning techniques in complex pattern recognition tasks.

The pitch class profile (PCP) [14] is a crucial tool in music analysis, revealing how pitch classes are distributed within a piece regardless of their octave. It provides valuable insights into the harmony and structure of the music, facilitating comparisons across different compositions or sections. Typically depicted through histograms or graphs, with pitch classes plotted on the x-axis and frequencies on the y-axis, the PCP allows analysts to identify recurring patterns, tonal centres, and harmonic progressions within the music. Widely utilized in music theory, composition, and computational music analysis, the PCP aids in understanding musical structures and styles. Additionally, the paper will explore the challenges encountered in guitar chord recognition, including complexities in playing techniques and setups, alongside limitations in available datasets. It will also discuss current techniques, evaluation metrics, and potential future research directions in this field. By providing a comprehensive survey of guitar chord recognition using machine learning, the paper aims to be a valuable resource for researchers, practitioners, and enthusiasts seeking to develop accurate tab recognition systems to meet the evolving demands of the music community.

II. LITERATURE SURVEY

Numerous research studies have explored machine learning techniques, including the Support Vector Machine (SVM), for guitar chord recognition, supported by tools and libraries such as Streamlit UI, Long File Profiler, shutil, os, sys, pydub, AudioSegment, numpy, fft, log2, and wavfile.

In Paper 1 [1], the evolution of machine learning is examined, starting from early linear classification and regression techniques to the advent of support vector machines (SVM). The paper discusses the transition to SVMs, driven by the need for non-linear decision boundaries in certain datasets. SVMs are recognized for their effectiveness in classification and regression tasks, as they can construct hyperplanes in multidimensional space to separate different class boundaries based on features. The introduction of kernels, which act as similarity functions, enables SVMs to handle non-linear classification. The paper explores various types of SVM classifiers, including linear and non-linear variants, and their applications in fields such as spam filtering, facial detection, text categorization, bioinformatics, and environmental disaster prediction. Additionally, the paper highlights the importance of kernels like polynomial, sigmoid, and radial basis functions in enabling SVMs to handle non-linear decision boundaries. Overall, the paper underscores the significance of SVMs in managing complex datasets and their broad practical utility across diverse domains.
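To make the role of kernels concrete, the following is a minimal sketch of the linear, polynomial, radial basis, and sigmoid kernels mentioned above, written with NumPy. The function names and the default values of `degree`, `gamma`, and `coef0` are illustrative assumptions and are not taken from Paper 1.

```python
import numpy as np

def linear_kernel(x, y):
    """Plain dot product; corresponds to a linear decision boundary."""
    return float(np.dot(x, y))

def polynomial_kernel(x, y, degree=3, coef0=1.0):
    """(x . y + coef0) ** degree; the defaults are illustrative only."""
    return float((np.dot(x, y) + coef0) ** degree)

def rbf_kernel(x, y, gamma=0.5):
    """exp(-gamma * ||x - y||^2); similarity decays with distance."""
    diff = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    return float(np.exp(-gamma * np.dot(diff, diff)))

def sigmoid_kernel(x, y, gamma=0.1, coef0=0.0):
    """tanh(gamma * x . y + coef0)."""
    return float(np.tanh(gamma * np.dot(x, y) + coef0))

# Two orthogonal unit vectors used as a small example.
a = np.array([1.0, 0.0])
b = np.array([0.0, 1.0])
print(linear_kernel(a, b), rbf_kernel(a, a), rbf_kernel(a, b))
```

Each function acts as a similarity measure between two feature vectors; an SVM with a non-linear kernel uses such similarities in place of the plain dot product.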

Paper 2 [2] discusses the importance of characterizing music content for efficient music information retrieval, highlighting the inadequacy of text-based annotations for describing music content. It presents Pitch Class Profile (PCP) features as a suitable method for representing chords due to their robustness across different instruments. The paper emphasizes the use of machine learning techniques to address the variations in chords caused by instruments, noise, and recording conditions. By establishing a database of guitar chords recorded under various acquisition conditions, together with samples from other instruments, the paper demonstrates that PCP is effective for describing chords in a machine-learning context and that the resulting models can recognize chords played on different instruments. The study aims to enhance automatic chord recognition in music, catering to the growing need for advanced music information retrieval systems in the digital age.

Paper 3 [3] introduces an innovative model design for automated guitar transcription. The proposed algorithm merges an attention mechanism with a convolutional neural network (CNN) to transcribe guitar notes at the note level accurately. The paper illustrates how this architecture effectively addresses the challenges associated with guitar transcription, including polyphonic sound, varying pitch, and timbre. By utilizing the attention mechanism, the model can concentrate on critical aspects of the input audio signal, thereby improving transcription accuracy. In summary, the paper makes a significant contribution to the advancement of automatic guitar transcription by offering a reliable and effective methodology for note-level transcription.

Paper 4 [4] presents an innovative method for automatically detecting piano music notations by leveraging deep learning techniques. The proposed algorithm adopts a multilayer convolutional stacking approach and gate activation functions to design a model structure without relying on a pooling layer, drawing inspiration from the Pixel CNN architecture. The paper thoroughly describes the methodology, encompassing preprocessing steps, model architecture, and training procedures. Through conducted experiments, the paper assesses the effectiveness of the proposed method using various evaluation metrics.

The findings demonstrate the deep learning-based approach's accuracy and efficiency in recognizing piano music notations. This paper offers valuable insights to researchers exploring music recognition and deep learning. By studying this paper, individuals can gain insights into applying deep learning techniques in piano music notation recognition, and understand the model's architectural design choices, optimization techniques, and performance evaluation methods. Additionally, the paper underscores the potential for further advancements in music recognition through deep learning algorithms.

Paper 5 [5] explores the application of deep learning techniques for recognizing and analyzing musical form. The paper introduces a peak-picking algorithm that approximates the location of musical segments, providing an array of timestamps. This algorithm uses deep learning models to analyze audio signals and identify structural elements such as verses, choruses, bridges, and other sections in a piece of music. The paper aims to enhance our understanding of musical form and structure through computational analysis. It discusses the methodology, model architecture, and training process for the deep learning models used in music form recognition. The experimental results demonstrate that the proposed algorithm is effective in identifying and segmenting musical sections. We can learn several lessons from this paper. First, we gain insight into applying deep learning techniques to music analysis and recognition tasks, including how to preprocess audio data and how to design and train deep learning models for music analysis. Additionally, we learn about the concept of musical form and its significance in music theory and composition. This paper provides a foundation for future research in music analysis using deep learning algorithms and opens up possibilities for automated music form recognition in applications such as music recommendation systems and automated music composition tools.

Paper 6 [6] focuses on the application of Convolutional Neural Networks (CNN) in recognizing the position and musical notation of music notes in Optical Music Recognition (OMR) systems. The authors propose an algorithm that uses a CNN for music note position recognition in OMR. Optical Music Recognition is a field that deals with the automatic extraction of music symbols such as notes, rests, and dynamics from sheet music images. By using a CNN, the algorithm can analyze and categorize the music notes present in the images accurately. Readers can gain insights into the application of deep learning techniques, specifically CNN, in the field of OMR. They will learn about the challenges associated with music note recognition in OMR systems, such as variations in music notation and image quality. The paper also provides an understanding of how CNNs can be trained and used for accurate position recognition and classification of music notes.

Paper 7 [7] provides valuable insights into the field of music transcription. This paper proposes an algorithm for converting audio notes into four different transcripts using four distinct categories. The first category is the frame-level transcript, which involves analyzing the audio at short time intervals and detecting the presence of musical notes. The second category, the note-level transcript, aims to accurately identify the pitch and timing of individual notes. The third category, the stream-level transcript, focuses on grouping notes that belong to the same musical stream or instrument. Finally, the notation-level transcript aims to represent the transcribed music using conventional musical notation. By studying this paper, we can learn about the challenges associated with automatic music transcription and the various levels of transcription that need to be addressed. We can also gain an understanding of the algorithms and techniques used for analyzing audio signals and extracting musical information. Moreover, we can benefit from the authors' proposed approach for handling different aspects of transcription, including frame-level analysis, note-level identification, stream-level grouping, and notation-level representation.

Paper 8 [8] delves into the utilization of deep predictive models within the realm of interactive music. The authors introduce an algorithm employing prediction techniques at the instrument, performer, and ensemble levels. This paper offers valuable insights into interactive music, showcasing the potential of deep learning models in this domain. Readers can deepen their understanding of interactive music and how deep learning approaches can enhance it. The paper covers various levels of prediction in music, including individual instrument behaviour, performer actions, and overall ensemble dynamics. By studying the algorithm proposed in this paper, readers can explore the implementation of deep learning techniques in interactive music settings. They will gain insight into the training process for deep predictive models to generate responsive and interactive musical compositions. Additionally, the paper underscores the importance of considering multiple levels of prediction to craft cohesive and immersive interactive music experiences.

Paper 9 [9] explores the utilization of deep neural networks for processing music score images efficiently. The paper introduces an algorithm designed to tackle the challenge of accurately segmenting various elements within a music score image, including the background, staff lines, music notes, and text. This paper offers valuable insights into the domain of document processing for music scores and underscores the potential of deep neural networks in this context. Readers can develop a thorough understanding of the complexities associated with processing music score images and the specific hurdles involved in accurately segmenting different components.

By examining the algorithm outlined in this paper, readers can gain insights into the practical application of deep learning techniques for document processing in music scores. They will acquire knowledge about the steps required to effectively separate the background, staff lines, music notes, and text, which are crucial for subsequent analysis and interpretation.

Paper 10 [10] introduces a system based on deep learning for automating the transcription of guitar music. The paper proposes an algorithm that integrates Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), and Attention Mechanisms to achieve precise transcription of guitar music from audio recordings. This paper offers valuable insights into the realm of automatic music transcription, with a specific focus on guitar music. Readers can grasp the challenges involved in transcribing guitar music and the utilization of deep learning techniques to overcome these obstacles. By reviewing the algorithm outlined in this paper, readers can understand the practical application of CNNs, RNNs, and Attention Mechanisms for guitar transcription. They will acquire knowledge about training deep learning models using audio data and employing them to transcribe guitar music accurately, including the identification of individual notes and chords.

Paper 11 [11] presents an algorithm that enhances the accuracy of guitar riff transcription by combining an attention model with LSTM networks. The paper offers valuable insights into the domain of guitar transcription, with a specific emphasis on the challenges associated with capturing the nuances of guitar riffs. Readers can understand the potential advantages of incorporating attention models to focus selectively on relevant sections of the guitar audio signal. Additionally, the paper discusses the implementation of LSTM networks to capture the temporal patterns and dependencies within guitar riffs, which are essential for achieving accurate transcription. By examining this paper, readers can gain knowledge about the overall process of transcribing guitar riffs, ranging from preprocessing the audio signal to predicting the corresponding notes or chords.

Paper 12 [12] introduces a novel approach for accurately transcribing guitar music. The paper proposes an algorithm that combines Probabilistic Latent Component Analysis (PLCA) with Automated Transcription Models to tackle the challenges associated with guitar transcription. This paper provides valuable insights into the field of guitar transcription, particularly highlighting the benefits of using PLCA for decomposing and modelling guitar audio signals. By studying this paper, we can learn about the advantages of PLCA in capturing the complex interactions between different components of guitar music, enabling the accurate transcription of individual notes, chords, and other playing techniques. Additionally, it presents the implementation of Automated Transcription Models, specifically a convolutive mixture of PLCA models, which further enhances the accuracy of guitar transcription. This paper offers a comprehensive understanding of the guitar transcription process and the potential applications of PLCA and Automated Transcription Models.

| Paper | Machine Learning Technique | Key Points |
|---|---|---|
| Paper 1 | Support Vector Machine (SVM) | Evolution from linear techniques to SVM; SVM constructing hyperplanes for non-linear decision boundaries; application in spam filtering, facial detection, etc.; importance of kernels like polynomial, sigmoid, radial basis functions |
| Paper 2 | Pitch Class Profile (PCP) Features | Efficient music information retrieval using PCP features; utilizing machine learning to address chord variations due to instruments and noise; database creation for chord recognition |
| Paper 3 | CNN with Attention Mechanism | Automatic guitar transcription using CNN and attention mechanism; handling challenges of polyphonic sounds, pitch variation, timbre |
| Paper 4 | Deep Learning for Piano Music Notations | Novel approach using deep learning for piano music recognition; experimentally proven effectiveness |
| Paper 5 | Deep Learning for Musical Form Recognition | Deep learning for music form analysis; algorithm for segmenting musical sections |
| Paper 6 | CNN for Optical Music Recognition | CNN for music note position recognition in OMR; challenges and solutions in the OMR system |
| Paper 7 | Audio Notes Transcription Algorithm | Frame-level, note-level, stream-level, notation-level audio transcription; various levels of transcription and challenges involved |
| Paper 8 | Deep Predictive Models in Interactive Music | Using deep learning for instrument, performer, and ensemble predictions; enhancing interactive music through deep learning models |
| Paper 9 | Deep Neural Networks for Music Score Processing | Processing music score images using deep neural networks; segmenting different elements accurately in music score images |
| Paper 10 | CNN, RNN, Attention for Guitar Transcription | Automatic guitar music transcription using CNNs, RNNs, and Attention Mechanism; deep learning for accurate guitar music transcription |
| Paper 11 | LSTM with Attention for Guitar Riff Transcription | Attention model and LSTM networks for accurate guitar riff transcription; capturing temporal patterns and dependencies in guitar riffs |
| Paper 12 | PLCA with Automated Transcription Models | PLCA and Automated Transcription Models for guitar transcription; decomposing guitar audio signals with PLCA, enhancing accuracy |

III. METHODS AND PROCEDURES

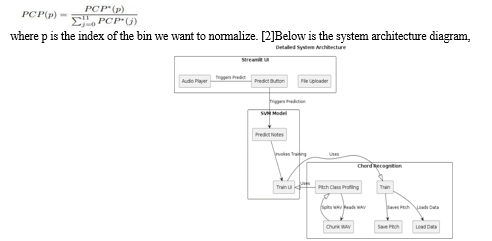

Python is used for both front-end and back-end development, with JSON used alongside Python on the back end for data processing. The project employs the following libraries and modules: SVM Model, Pitch Class Profile, Streamlit UI, Long File Profiler, shutil, os, sys, pydub, AudioSegment, numpy, fft, log2, and wavfile. Python was chosen as the primary language for its versatility, ease of use, and the wide range of libraries available for data manipulation, machine learning, and user interface development. The SVM Model module creates Support Vector Machine (SVM) models, which are known for their effectiveness in tasks such as musical chord classification. The Pitch Class Profile module extracts key features from audio data, enabling the models to learn the patterns of musical chords. Streamlit UI provides a user-friendly interface for interacting with the application. The Long File Profiler module handles longer audio files, which is crucial for processing entire musical compositions. shutil, os, and sys help with file manipulation and system operations. pydub and its AudioSegment class are used for audio file manipulation, while numpy provides efficient numerical operations. The fft and log2 functions are used when extracting frequency-domain features from audio signals, with fft computing the Fourier transform. wavfile is used for reading and writing WAV files, a common audio file format. Together, these libraries and modules provide a framework for musical chord classification and recommendation using machine learning, covering data processing, feature extraction, model building, and user interaction.

The project comprises multiple modules, each fulfilling a specific function within the WAV File Note Predictor application. The "SVM Model" module predicts notes from uploaded WAV files, using the `train_ui` function to train Support Vector Machine (SVM) models. The "Streamlit UI" module handles user interaction via Streamlit, enabling file uploads and playback. When the user clicks the "Predict" button, the system processes the file, predicts notes, and displays the results. The "Pitch Class Profiler" module extracts pitch data from audio samples, while the "Long File Profiler" subclass segments longer audio files for more efficient profiling. Lastly, in the "Training and Prediction" module, machine learning models such as KNN, AdaBoost, Decision Tree, and SVM are trained on the extracted pitch profiles to predict notes from audio files. Because the distribution of chord energy may vary between trials, Pitch Class Profile (PCP) vectors must be normalized before they can be compared consistently. To normalize a PCP vector, we divide the energy of each bin by the total energy of the original PCP, that is,

$$\mathrm{PCP}(p) = \frac{\mathrm{PCP}^{*}(p)}{\sum_{j} \mathrm{PCP}^{*}(j)},$$

where $\mathrm{PCP}^{*}$ denotes the original (unnormalized) vector and the sum runs over all of its bins.
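The normalization described above can be sketched in a few lines of NumPy. The function name `normalize_pcp`, the 12-bin example vector, and the handling of an all-zero (silent) vector are assumptions made for illustration; they are not taken from the project code.

```python
import numpy as np

def normalize_pcp(pcp):
    """Divide each bin by the total energy so that the bins sum to 1."""
    pcp = np.asarray(pcp, dtype=float)
    total = pcp.sum()
    if total == 0:
        # Assumption: a silent segment is returned unchanged.
        return pcp.copy()
    return pcp / total

# Hypothetical raw PCP with energy on C, E, and G (bins 0, 4, and 7).
raw = np.array([4.0, 0, 0, 0, 2.0, 0, 0, 2.0, 0, 0, 0, 0])
norm = normalize_pcp(raw)
print(norm)
```

After normalization, two recordings of the same chord at different loudness levels yield the same vector, which is what makes PCPs comparable across trials.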

In Step 1, the user interface is created with Streamlit and prompts users to upload .wav files. Once uploaded, the file is displayed using the `st.audio` function, and upon clicking "Predict," the system processes the file.

Moving to Step 2, the WAV file is chunked using the `chunk_wav` function, which splits it into smaller 0.5-second segments saved as individual .wav files in the "chunks" directory.
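The chunking step might look roughly like the sketch below. It uses Python's standard `wave` module rather than pydub to stay dependency-free, and the name `chunk_wav_sketch`, the output file naming scheme, and the return value are hypothetical; the project's actual `chunk_wav` function may differ.

```python
import os
import wave

def chunk_wav_sketch(path, out_dir="chunks", seconds=0.5):
    """Split a WAV file into fixed-length pieces and return their paths."""
    os.makedirs(out_dir, exist_ok=True)
    paths = []
    with wave.open(path, "rb") as src:
        params = src.getparams()
        frames_per_chunk = int(src.getframerate() * seconds)
        index = 0
        while True:
            frames = src.readframes(frames_per_chunk)
            if not frames:
                break  # end of file reached
            out_path = os.path.join(out_dir, f"chunk_{index:04d}.wav")
            with wave.open(out_path, "wb") as dst:
                # Copy channels, sample width, and rate from the source;
                # the frame count in the header is corrected on close.
                dst.setparams(params)
                dst.writeframes(frames)
            paths.append(out_path)
            index += 1
    return paths
```

The last chunk is shorter than 0.5 seconds whenever the file length is not an exact multiple of the chunk length; this sketch keeps it rather than discarding it.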

Step 3 introduces pitch class profiling with the `PitchClassProfiler` class, which computes the Pitch Class Profile (PCP) from audio samples. This class includes methods for reading the WAV file, computing the Fourier transform, and calculating the PCP for each segment, and it visualizes the results as bar graphs using `matplotlib`.
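As an illustration of how a PCP can be computed from a Fourier transform, the sketch below folds the energy of each FFT bin onto one of 12 pitch classes using a base-2 logarithm. The function name `pitch_class_profile`, the reference frequency of 261.63 Hz (C4), and the 20 Hz lower cut-off are assumptions for this example and do not describe the actual `PitchClassProfiler` implementation.

```python
import numpy as np

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def pitch_class_profile(samples, sample_rate, f_ref=261.63):
    """Return a 12-bin PCP: spectral energy summed per pitch class."""
    samples = np.asarray(samples, dtype=float)
    energy = np.abs(np.fft.rfft(samples)) ** 2
    freqs = np.fft.rfftfreq(len(samples), d=1.0 / sample_rate)
    # Skip DC and sub-audio bins, where log2 is undefined or meaningless.
    audible = freqs > 20.0
    # Distance from the reference note in semitones, rounded to the nearest.
    semitones = np.round(12 * np.log2(freqs[audible] / f_ref)).astype(int)
    pcp = np.zeros(12)
    # Fold all octaves onto the 12 pitch classes and accumulate the energy.
    np.add.at(pcp, semitones % 12, energy[audible])
    return pcp

# One second of a 440 Hz sine wave (the note A) sampled at 8 kHz.
rate = 8000
t = np.arange(rate) / rate
pcp = pitch_class_profile(np.sin(2 * np.pi * 440.0 * t), rate)
print(NOTE_NAMES[int(np.argmax(pcp))])
```

Because every octave of a note maps to the same bin, the resulting vector describes which pitch classes are present regardless of register.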

Step 4 delves into training machine learning models, starting with the `save_pitch` function extracting pitch classes and genre labels to a JSON file. The `load_data` function then loads the training dataset, followed by training various models (K-Nearest Neighbors, AdaBoost, Decision Tree, SVM with linear and RBF kernels) on pitch class profiles and predicting notes from test data.
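To illustrate the idea of training a classifier on pitch class profiles, the sketch below implements a small k-nearest-neighbour classifier in plain NumPy and applies it to synthetic PCP vectors. It is a stand-in for the library models listed above, not the project's `train` function; the chord templates, noise level, and all function names are assumptions for this example.

```python
import numpy as np

def chord_template(pitch_classes):
    """Idealized normalized PCP with equal energy on the given classes."""
    pcp = np.zeros(12)
    pcp[list(pitch_classes)] = 1.0
    return pcp / pcp.sum()

def knn_predict(train_x, train_y, query, k=3):
    """Majority label among the k training vectors closest to the query."""
    dists = np.linalg.norm(train_x - query, axis=1)
    nearest = np.argsort(dists)[:k]
    labels, counts = np.unique(train_y[nearest], return_counts=True)
    return labels[np.argmax(counts)]

# Synthetic training set: 20 slightly perturbed examples per chord.
rng = np.random.default_rng(0)
chords = {"C": (0, 4, 7), "G": (7, 11, 2), "Am": (9, 0, 4)}
train_x, train_y = [], []
for name, classes in chords.items():
    for _ in range(20):
        noisy = chord_template(classes) + rng.uniform(0.0, 0.05, size=12)
        train_x.append(noisy / noisy.sum())
        train_y.append(name)
train_x = np.array(train_x)
train_y = np.array(train_y)

prediction = knn_predict(train_x, train_y, chord_template((0, 4, 7)))
print(prediction)
```

In practice the training vectors would come from recorded audio rather than templates, and the same feature matrix could be passed to any of the other classifiers for comparison.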

Finally, Step 5 handles user interaction and output, where running the program from the command line expects a song name argument. The `main` function utilizes this argument to call the `train` function, printing predicted notes for each model. In summary, the process begins with users uploading a .wav file, which is then split into segments and saved for further processing. Pitch Class Profile (PCP) computation is performed on each segment, extracting essential pitch data. Machine learning models are trained on this PCP data, enabling them to predict notes from audio files. The predicted notes are then printed for each model, providing users with insights into the musical content of the uploaded audio. Overall, this algorithmic flow enables the automatic prediction of musical notes from uploaded audio files using machine-learning models trained on PCP features.

The testing process covers two primary categories: manual testing and the execution of test cases along with their outcomes. Manual testing comprises two components: User Interface Testing and Functionality Testing. In User Interface Testing, testers check the behaviour of the Streamlit interface by uploading .wav files, confirming that audio playback works, and validating that predictions are displayed correctly when the "Predict" button is clicked. Functionality Testing examines individual functions such as `chunk_wav`, `PitchClassProfiler`, `train_ui`, and `train` to verify that they perform as intended.

The document emphasizes the importance of employing a learning approach based on actual samples to achieve optimal performance in identifying musical chords. It highlights the effectiveness of training models using both noise-free and noisy chord samples, demonstrating that a mixed learning set results in more resilient models. Moreover, it underscores the applicability of Pitch Class Profile (PCP) features for representing chords across different instruments and the role of machine learning methods in handling diverse chord representations. Additionally, the conclusion centres around Support Vector Machines (SVM) and their models, which are pivotal for classification and regression analysis with linear and nonlinear decision boundaries.
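One way to build such a mixed learning set is to add noise to clean samples at a chosen signal-to-noise ratio (SNR). The sketch below assumes additive white Gaussian noise, which is a simplification; the source does not specify how its noisy samples were obtained, and the function name `add_noise` is hypothetical.

```python
import numpy as np

def add_noise(samples, snr_db, rng):
    """Return the samples with white Gaussian noise at the requested SNR."""
    samples = np.asarray(samples, dtype=float)
    signal_power = np.mean(samples ** 2)
    # SNR in dB is 10 * log10(signal_power / noise_power).
    noise_power = signal_power / (10 ** (snr_db / 10))
    noise = rng.normal(0.0, np.sqrt(noise_power), size=samples.shape)
    return samples + noise

rng = np.random.default_rng(0)
rate = 8000
t = np.arange(rate) / rate
clean = np.sin(2 * np.pi * 440.0 * t)
noisy = add_noise(clean, snr_db=10, rng=rng)

# A mixed learning set contains both the clean and the noisy version.
mixed_set = [clean, noisy]
```

Features extracted from both versions can then be used together for training, so that the model sees each chord under clean and degraded conditions.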

The paper discusses SVM's significance in various domains like bioinformatics and predictive control of environmental disasters. Despite some limitations, SVM remains valuable for tasks such as spam filtering and facial detection, renowned for its precision and optimization compared to alternative algorithms. The document also touches upon evaluation criteria for classifiers and the challenge of selecting the appropriate kernel function to maximize class separability in datasets. Drawing from these concepts, the iterative Agile Model aligns closely with the discussed software development approach. Agile underscores flexibility, customer collaboration, and iterative development, all of which resonate with the ongoing enhancement of the music information retrieval system. Agile's principles, including individuals and interactions, working software, customer collaboration, and responding to change, are highlighted. The Agile Manifesto principles prioritize self-organization, working software demos for customer feedback, continuous customer collaboration, and swift responses to changes in development. This model allows for iterative testing, validation with subsets of chords, and adaptation to evolving requirements.

V. FUTURE WORK

In summary, this paper establishes a strong groundwork for advancing automatic music transcription systems by adapting methodologies initially developed for guitar transcription. This adaptation opens up possibilities for automating transcription across diverse musical instruments, potentially revolutionizing our engagement with music. The incorporation of attention mechanisms and deep-learning models offers exciting prospects for real-time transcription systems, enhancing musical experiences during live performances and practice sessions by providing immediate feedback. Looking ahead, optimizing these systems for efficiency and accuracy, particularly in real-time settings, becomes crucial, requiring the expansion of training datasets and enhancements in user interfaces to ensure widespread usability. Furthermore, exploring interdisciplinary applications, such as integrating chord recognition with speech recognition or audio editing tools, promises to unveil new avenues and underscore the adaptability of these technologies, potentially reshaping music creation and performance in the digital era.

Further exploration entails evaluating and comparing various deep-learning models and attention mechanisms specifically for automatic guitar transcription. This involves scrutinizing the effectiveness of models like Convolutional Neural Networks (CNNs) or Recurrent Neural Networks (RNNs) in capturing intricate chord progressions, alongside continuous improvements in machine learning models like Support Vector Machines (SVMs) to bolster chord recognition accuracy and resilience. Optimizing systems for real-time chord recognition in live performances or streaming applications remains a promising direction, necessitating the reduction of processing latency while maintaining high accuracy for seamless integration into musical contexts. Expanding and diversifying training datasets to encompass various music genres, instruments, playing styles, and audio qualities is essential for enhancing system performance and adaptability. Additionally, enhancing user interfaces to cater to the needs of musicians and music enthusiasts, and exploring cross-domain applications such as integrating chord recognition with speech recognition or audio editing software, can showcase the versatility and wide-ranging impact of these systems across different domains.

Conclusion

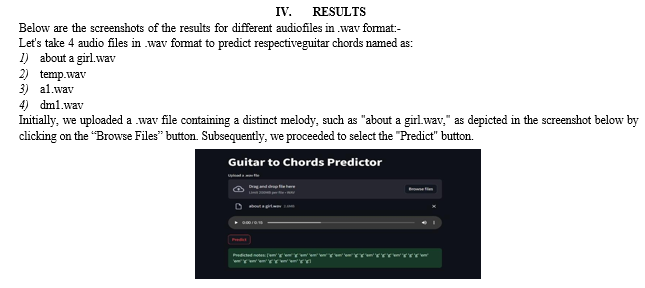

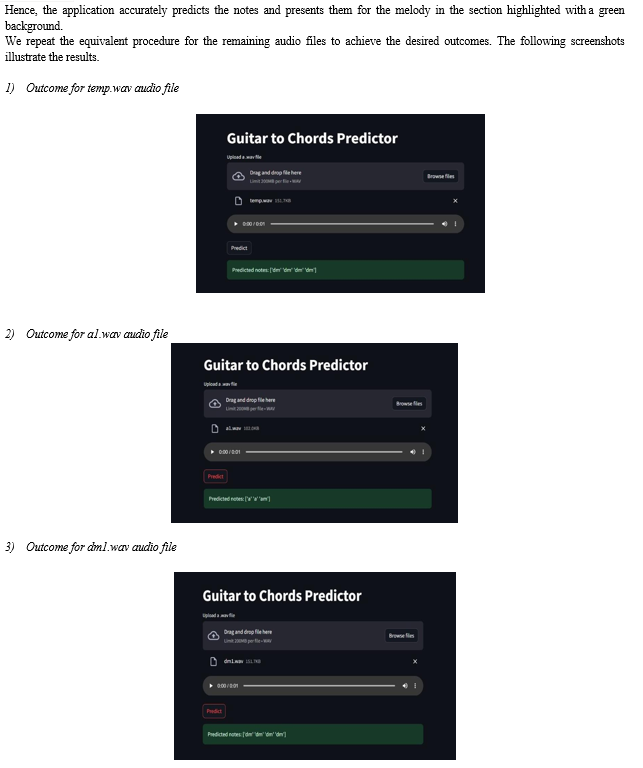

In conclusion, the application produces the intended output: it accurately predicts the notes in an uploaded .wav file containing a clear melody. Following the methodology, the user uploads the file and clicks the "Predict" button, and the application returns the predicted notes for the melody. Users can therefore rely on the application to quickly analyze a melody and display its predicted notes, which streamlines music analysis.
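The paper does not describe how the application turns a detected pitch into a note label. As a minimal sketch of that final step, assuming twelve-tone equal temperament with A4 tuned to 440 Hz and standard MIDI note numbering (the function name and the sharp-only spelling are choices made for this example, not taken from the application), a fundamental frequency can be mapped to a note name as follows:

```python
import math

# Pitch-class names, using sharps only (an assumption of this sketch).
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def frequency_to_note(freq_hz, a4_hz=440.0):
    """Return the nearest equal-tempered note name, e.g. 'A4', for a frequency."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    # MIDI note 69 is A4; each semitone is a factor of 2**(1/12) in frequency.
    midi = round(69 + 12 * math.log2(freq_hz / a4_hz))
    octave = midi // 12 - 1  # MIDI note 60 is C4
    return f"{NOTE_NAMES[midi % 12]}{octave}"


if __name__ == "__main__":
    for f in (261.63, 329.63, 440.0):
        print(f, "Hz ->", frequency_to_note(f))
```

Estimating the fundamental frequency from the .wav file is a separate problem that this sketch does not address.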


Copyright

Copyright © 2024 Shrikant Devidas Kharat, Sujay Sanjay Shekokar, Prajwal Sudhir Waghmare, Sarthak K. Hupare, Prof. Jayshree S. Mahajan. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET63390

Publish Date : 2024-06-21

ISSN : 2321-9653

Publisher Name : IJRASET
